Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Introduction: Making Sense of Public Engagement with AI

Public engagement with artificial intelligence (AI) has become an urgent issue as the technology develops rapidly. Discussions of AI's role in society frequently stress the value of evidence-based solutions and diverse viewpoints, with the aim of including traditionally marginalized groups and fostering informed engagement among the general population.

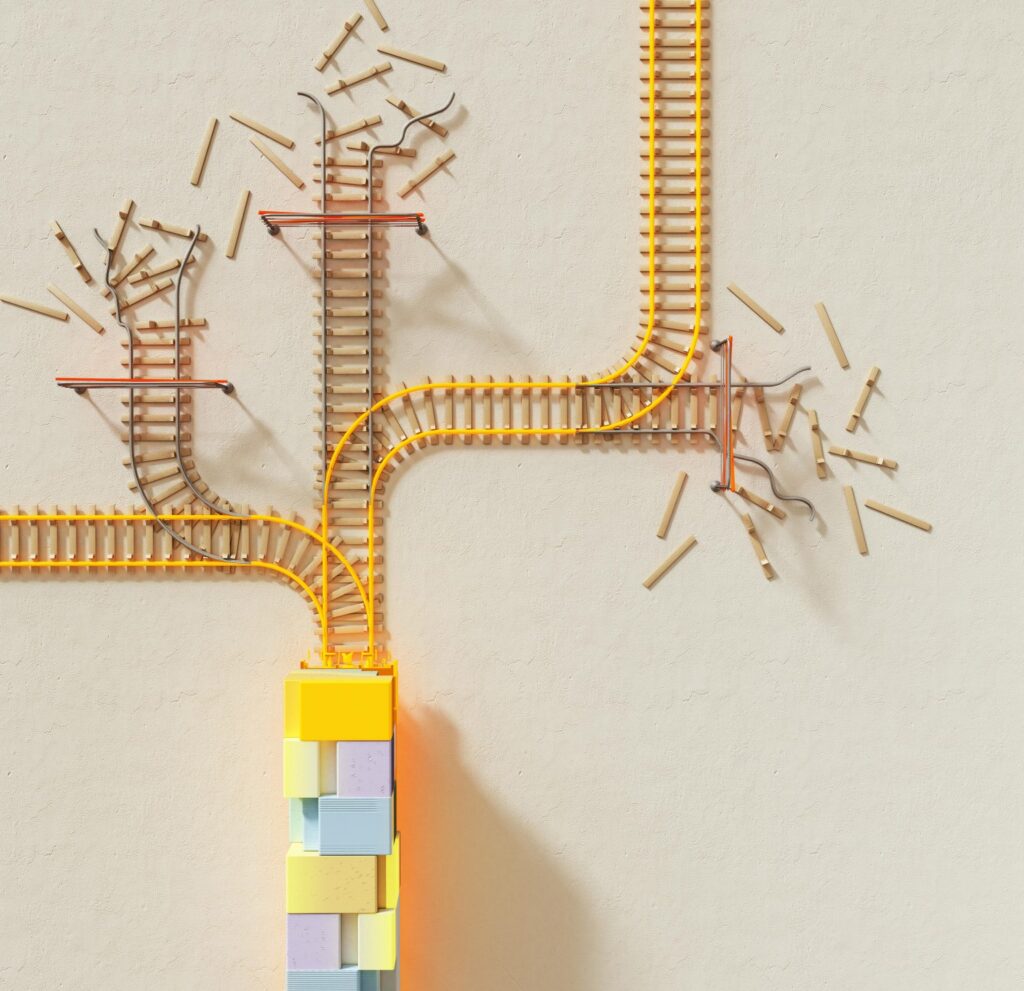

However, achieving representative and meaningful public participation is more complicated than simply increasing representation or encouraging open discussion. The difficulties run deeper: serious communication barriers, dense technical terminology, and gaps in understanding between AI researchers and everyone else. Addressing these gaps effectively is essential if AI development is to remain consistent with the values of society as a whole.

Within and Without the AI Bubble: Hearing All the Voices

Recent discourse, including influential webinars organized by social scientists, emphasizes the need to give a voice to underrepresented groups that are adversely or disproportionately affected by AI technologies. These workshops explore evidence-based measures and various forms of community participation as ways to develop inclusive AI policies and practices.

An important yet frequently neglected dimension, however, is the set of voices within the AI research community itself. Engaging AI researchers effectively requires two-way communication channels, in which those closest to AI's technical details are equally able to express their own views and to understand outside opinions. Closing the gap between internal and external dialogue helps stakeholders work through the complexities and potential ethical issues that AI technologies raise.

Beyond the Two Worlds: The Expert in AI and the Layperson

A curious difficulty arises when trying to establish communication between AI specialists and the general public. For example, while idly browsing social media platforms such as Bluesky or Twitter, it is easy to stumble into a conversation full of highly specialized terminology: vector mathematics, neural network projections, gradient computations, and multidimensional optimization. To laypersons, such discussions can be incomprehensible.

This terminology is not merely elitist jargon; it reflects the complexity and precision of AI research, a field in which some of the most important insights come from highly abstract mathematical formalisms. A grasp of these concepts is essential for even a basic conversation with AI researchers, yet it demands a great deal of prerequisite knowledge. The challenge, then, is to translate those abstractions into familiar stories without losing their meaning.

Demystifying AI Alignment: Technical Basis and Practical Implications

The concept of AI alignment illustrates this complexity well. In the popular imagination, alignment means making AI systems safe and beneficial to humans. Researchers, however, approach it in highly technical and mathematical terms, discussing issues such as reward hacking, inner alignment, and distributional shift. These are not merely philosophical reflections but concrete technical problems that can profoundly affect how AI systems behave.

In the literature, researchers discuss alignment in terms of vector projections, gradient computations, and loss functions, all of which demand a high level of mathematical literacy. As a result, the alignment conversation can exclude laypersons, leaving public discourse shallow or simplistic. Explaining these ideas in simple terms, through analogies and real-life applications, would go a long way toward improving general understanding.
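To give a flavour of what this vocabulary refers to, here is a toy Python sketch. It is not taken from any alignment paper, and everything in it is a hypothetical illustration: a made-up "behaviour" vector is projected onto a made-up "desired" direction to split it into an aligned part and a leftover part, and then a single gradient-descent step is taken on a squared-error loss for a one-parameter model. Real research works with vastly higher-dimensional quantities.

```python
# Toy illustration only: all vectors, the model, and the numbers are hypothetical.

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))

def project(v, direction):
    """Vector projection of v onto the line spanned by `direction`."""
    scale = dot(v, direction) / dot(direction, direction)
    return [scale * di for di in direction]

def squared_loss(w, x, y):
    """Squared error of a one-parameter model that predicts w * x."""
    return (w * x - y) ** 2

def grad_squared_loss(w, x, y):
    """Derivative of squared_loss with respect to w."""
    return 2 * (w * x - y) * x

# Split a hypothetical "behaviour" vector into the part pointing along a
# hypothetical "desired" direction and the part orthogonal to it.
behaviour = [3.0, 4.0]
desired = [1.0, 0.0]
aligned_part = project(behaviour, desired)
leftover_part = [b - a for b, a in zip(behaviour, aligned_part)]
print(aligned_part, leftover_part)  # [3.0, 0.0] [0.0, 4.0]

# One gradient-descent step: move w against the gradient so that the
# prediction w * x gets closer to the target y.
w, x, y, learning_rate = 0.0, 2.0, 6.0, 0.1
w_new = w - learning_rate * grad_squared_loss(w, x, y)
# The loss falls from 36.0 to about 1.44 after this single step.
print(squared_loss(w, x, y), squared_loss(w_new, x, y))
```

The projection gives one concrete meaning to "how much of X points in direction Y", and the gradient step is the basic move behind training by loss minimization. Both are simplified far beyond anything that appears in actual alignment work.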

Background and Philosophy: Pre-Modern AI History

The history of artificial intelligence can be traced to the early computational models of the mid-20th century. From Alan Turing's foundational papers to today's state-of-the-art deep learning architectures, each development has introduced new and more complex mathematical and computational methods. This history has profoundly shaped the communication patterns and conceptual vocabulary of today's AI researchers.

Furthermore, present-day AI research organizations such as Google DeepMind, OpenAI, and Anthropic place theoretical frameworks and extensive mathematical modeling at the core of their approach. These methods have fostered an internal culture of mathematical rigor and conceptual clarity, but have unintentionally made the work harder for the public to follow. Highlighting these historical processes and philosophical foundations can help build common ground between AI researchers and the general audience.

Communication Barriers: Technical Difficulties

AI's reliance on mathematical and algorithmic complexity is both a strength and an obstacle. These technicalities drive innovation, but they also limit effective public participation. What is needed, then, is a renewed effort in science communication: the kind that demystifies complicated mathematical concepts without oversimplifying them.

Efforts such as AI explainability frameworks, accessible yet precise education, and lively dialogue forums built on interdisciplinary cooperation among AI researchers, social scientists, and communicators would go a long way toward bridging these gaps. Conveying complex AI concepts through compelling narrative formats and interactive media could also make them far more accessible to wider audiences.

Mutual Understanding: Toward Improved AI Policy and Practice

Popular discussion of AI tends to focus on applications that are easy to picture, such as self-driving cars and chatbots, rather than on the underlying problems of alignment and optimization that researchers work on. Resolving this disconnect requires deliberate efforts toward mutual understanding:

Educational Outreach: Creating comprehensive educational programs and resources that demystify AI terms and concepts through hands-on demonstrations and familiar scenarios.

Interdisciplinary Collaboration: Promoting collaborative models in which AI researchers and social scientists share findings with each other and engage the general public in plain language.

Improved Public Forums: Establishing structured conversations in which researchers discuss their work in language people can relate to, and members of the public can clearly express their concerns and views. These forums may take the form of virtual webinars, community roundtables, or public exhibitions.

Conclusion: Demystifying AI Communication

Making public engagement with AI meaningful requires recognizing the depth of the linguistic and conceptual gaps between researchers and everyone else. Amplifying a variety of voices is certainly crucial, but it is equally vital to ensure that the findings of a field as highly technical as AI research are clearly explained.

This two-way strategy is achievable, and it can enrich public involvement while keeping AI development more genuinely aligned with society's values, concerns, and interests. How these divides are bridged will shape not only popular perception but also the conscientious creation and regulation of artificial intelligence in the years to come, helping to build a more inclusive and better-informed society.